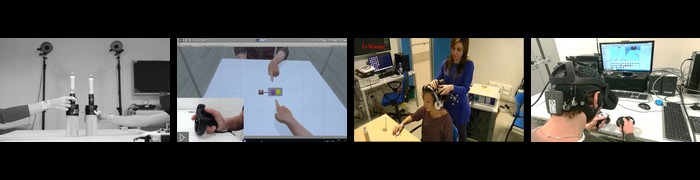

Advances in brain-computer interface (BCI) technology allow people to interact actively with the world through surrogates. Controlling real humanoid robots through a BCI as intuitively as we control our own bodies remains a challenge for current research in robotics and neuroscience. To interact successfully with the environment, the brain integrates multiple sensory cues into a coherent representation of the world. Cognitive neuroscience studies demonstrate that multisensory integration may yield a gain over a single modality and ultimately improve overall sensorimotor performance. For example, reactivity to simultaneous visual and auditory stimuli may be higher than the sum of the reactivity to the same stimuli delivered in isolation or in temporal sequence. Yet little is known about whether audio-visual integration can improve the control of a surrogate. To explore this issue, we provided human footstep sounds as audio feedback to BCI users while they controlled a humanoid robot. Participants were asked to steer their robot surrogate and perform a pick-and-place task through a BCI based on steady-state visually evoked potentials (SSVEPs). We found that audio-visual synchrony between the footstep sounds and the humanoid's actual walking reduces the time required to steer the robot. Thus, auditory feedback congruent with the humanoid's actions may improve the motor decisions of the BCI user and support the feeling of control over the robot. Our results shed light on the possibility of improving robot control by combining multisensory feedback to a BCI user.

Front Neurorobot. 2014 Jun 17;8:20. doi: 10.3389/fnbot.2014.00020. eCollection 2014